Several years ago, I worked on a high-profile project that had some challenging requirements. The customer needed to be able to bring up a complete development environment within 10 minutes. Each virtual machine needed a large number of software applications installed and configured. They wanted to be able to ensure that the operating system was always up to date, but they did not want to manually create or maintain images.

Interestingly enough, the problem is similar to one that many customers face when using GitHub’s Actions Runner Controller (ARC) or a self-hosted runner. In both cases, you have to take responsibility for the image, ensure that it has the right tools installed and configured, and keep the tools and operating system up-to-date.

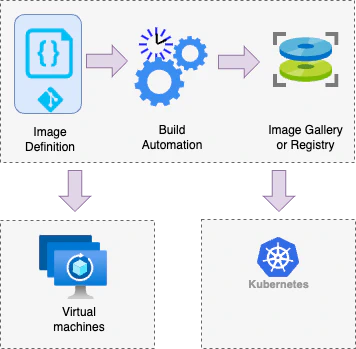

The solution to both of these needs is the same: adopt the image factory pattern. An image factory builds and distributes images, installing and configuring any required software. Naturally, this process relies on infrastructure-as-code. The image definition is checked into source control. An automated process then uses that definition to “bake” the image. The base image is typically one that is updated regularly, so the operating system (or, for Docker, the installed components) is kept up-to-date. The installation and configuration of the software is a one-time cost paid at build time, so startup time is minimized for VMs. Since the process is completely automated, it has little or no ongoing maintenance cost.

The basic pattern is as simple as it sounds:

Virtual machines

For virtual machines, there are a few approaches that can be used. If you’re using Azure DevTest Labs, you can create formulas that combine a base image with artifacts. Once the VM has been configured, the image can be captured and reused as the base for creating other VMs. In fact, the image factory pattern is natively supported. There’s even a walkthrough of implementing the pattern using Azure DevOps. There’s also a video created by Peter Hauge (Microsoft) in 2017 that explores the factory pattern and creating factories in DevTest Labs.

The more common approach is to build the image using Packer. The GitHub Runner images are an example of this approach. The image templates are stored in the repository. Using a scheduled workflow, Packer is executed with the template. A remote machine is created; Packer configures the box, captures the image, and deletes the running virtual machine. The image can then be stored for use in creating VMs. If you’re using Azure, this can all be completed with managed services. Microsoft even provides a walkthrough of creating and publishing an image from a Packer template.
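
As a rough illustration of what the scheduled job might run, here is a minimal Python sketch that assembles a `packer build` invocation. The template name `runner.pkr.hcl`, the `image_version` variable, and the date-based versioning scheme are assumptions made for the example; they are not taken from the GitHub Runner images repository.

```python
import shlex
from datetime import date


def image_version(build_date: date) -> str:
    """Derive a date-based version string (an assumed naming scheme)."""
    return f"{build_date.year}.{build_date.month}.{build_date.day}"


def packer_build_command(template: str, variables: dict[str, str]) -> list[str]:
    """Return the argument list for a `packer build` run."""
    command = ["packer", "build"]
    for name, value in sorted(variables.items()):
        # Each variable is passed as its own `-var name=value` pair.
        command += ["-var", f"{name}={value}"]
    # Options come first; the template path is the final argument.
    command.append(template)
    return command


# Hypothetical template and variable names, for illustration only.
argv = packer_build_command(
    "runner.pkr.hcl",
    {"image_version": image_version(date(2024, 5, 17))},
)
# A scheduled job would hand argv to subprocess.run(argv, check=True).
print(shlex.join(argv))
```

Building the argument list separately from executing it keeps the sketch easy to test without Packer installed.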

This approach also works well for VMware. In fact, Packer can be used with ESXi or vSphere to generate and capture images automatically. These images are stored on the host as templates, and these templates can be used to automatically create usable virtual machines. If you want to explore this further, VMware provides sample Packer templates.

In each of these cases, the created virtual machines should be short-lived. When a new VM is needed, it can be created from the latest version of the template or image. It helps in these cases to keep the data disk(s) separate from the imaged OS disk. This ensures that the data from the former environment is available in the new one.
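
Picking "the latest version" is worth doing numerically rather than alphabetically. The sketch below assumes image versions are dotted integers such as `1.10.0`; that scheme and the function names are assumptions for the example, and your image store may expose versions differently.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Split a dotted version like '1.10.0' into integers for comparison."""
    return tuple(int(part) for part in version.split("."))


def latest_image(versions: list[str]) -> str:
    """Return the highest version, comparing numerically, not as text."""
    if not versions:
        raise ValueError("no image versions available")
    return max(versions, key=parse_version)


# A plain string comparison would wrongly rank '1.9.0' above '1.10.0'.
print(latest_image(["1.9.0", "1.10.0", "1.2.3"]))
```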

For longer-lived servers that are continuously updated, consider a blue-green deployment pattern. First, deploy the latest version of the image, migrating any required data to the new instance. Next, change the networking to ensure the new instance is receiving the traffic. Finally, when you are satisfied that everything is running as expected, deallocate the older virtual machine.
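
The ordering of those steps can be sketched in a few lines of Python. This is only a toy in-memory model: `Environment`, `blue_green_swap`, and the `verify` callback are hypothetical names, and the rollback on a failed check is an assumption about how you might handle an unhealthy instance. Real deployments would call your cloud provider's APIs at each step.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Environment:
    """Hypothetical model: which VM receives traffic and which VMs exist."""
    active: str
    instances: set[str] = field(default_factory=set)


def blue_green_swap(
    env: Environment, new_vm: str, verify: Callable[[str], bool]
) -> bool:
    """Deploy new_vm, route traffic to it, then retire the old VM if healthy."""
    old_vm = env.active
    # 1. Deploy the instance built from the latest image
    #    (data migration would happen here).
    env.instances.add(new_vm)
    # 2. Change the networking so the new instance receives the traffic.
    env.active = new_vm
    if not verify(new_vm):
        # Not satisfied: send traffic back and discard the new instance.
        env.active = old_vm
        env.instances.discard(new_vm)
        return False
    # 3. Everything runs as expected: deallocate the older VM.
    env.instances.discard(old_vm)
    return True


env = Environment(active="vm-blue", instances={"vm-blue"})
swapped = blue_green_swap(env, "vm-green", verify=lambda vm: True)
print(swapped, env.active)
```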

Docker (OCI) images

The same principle also applies to Docker (OCI) images and Codespaces. For container images, the approach is structured and standardized, so most developers are already familiar with it. You define a Dockerfile that builds the image and then publish the image to a registry. Doing this on a regular schedule ensures that the base image is continuously updated, patching any vulnerabilities or bugs that may exist.
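
A scheduled rebuild might boil down to two commands, sketched here in Python. The repository name and the date-based tag are assumptions for the example. The `--pull` flag asks `docker build` to check for a newer base image instead of reusing a cached copy, which is what keeps the scheduled build picking up patches.

```python
from datetime import date


def docker_publish_commands(
    repository: str, build_date: date, context: str = "."
) -> list[list[str]]:
    """Return the build and push commands for a dated image tag."""
    # Assumed tagging scheme: one tag per build date, e.g. 2024.05.17.
    image = f"{repository}:{build_date:%Y.%m.%d}"
    return [
        # --pull refreshes the base image rather than trusting the local cache.
        ["docker", "build", "--pull", "--tag", image, context],
        ["docker", "push", image],
    ]


# Hypothetical repository name, for illustration only.
commands = docker_publish_commands("ghcr.io/example/runner", date(2024, 5, 17))
for command in commands:
    # A scheduled job would execute each with subprocess.run(command, check=True).
    print(" ".join(command))
```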

Docker also has two advantages. First, it supports multi-stage builds. This allows you to build multiple stages in parallel, then selectively copy components of those stages into the final image. This can result in significantly smaller (and more secure) images. Second, it can do a multi-platform build, creating an image that works across multiple architectures. That said, multi-platform builds often rely on QEMU emulation when the build host’s architecture differs from the target (which can be incredibly slow).

While you can use the latest tag to always pull the most current version, the recommended approach is to explicitly specify the desired version. This ensures consistent, repeatable deployments. If you’re using Kubernetes, this can mean updating the Pod to use a new image.
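
One way to enforce that recommendation is a small check in the pipeline that rejects unpinned references. The sketch below is a simplified parser rather than a full implementation of the image reference grammar, and the function name and sample image names are assumptions for the example.

```python
def is_pinned(image: str) -> bool:
    """Return True if the reference has a digest or an explicit non-latest tag."""
    if "@" in image:
        # Digest references (name@sha256:...) always identify one exact image.
        return True
    # Only inspect the final path segment so a registry port such as
    # localhost:5000 is not mistaken for a tag.
    last_segment = image.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        # No tag at all means the implicit default, latest.
        return False
    tag = last_segment.rsplit(":", 1)[1]
    return tag not in ("", "latest")


# Hypothetical image names, for illustration only.
for reference in ("ghcr.io/example/runner:2.311.0", "ghcr.io/example/runner:latest"):
    print(reference, is_pinned(reference))
```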

Chaining factories

One of the more powerful approaches to this pattern is chaining together multiple factories. The image generated by one factory becomes the base image of the next. This allows you to compose complex solutions while minimizing the time required to build the image. This principle applies to both virtual machine images and Docker/OCI images.

As a simple example, let’s assume you need images for three applications that each require a properly configured database server on the image. First, create a factory that builds a base image that contains the database server. Next, create a factory for each application which configures the required database and installs the application. By doing this, the database server only needs to be installed during one build; that process can be handled centrally. The application installs can then execute in parallel, with each one able to assume a database server is already installed and available.
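
The example above can be sketched as a dependency graph, using Python's standard `graphlib` module to work out which factories can run together. The image names are hypothetical, and mapping each image to a single base image is an assumption of this sketch.

```python
from graphlib import TopologicalSorter
from typing import Optional


def build_waves(base_images: dict[str, Optional[str]]) -> list[list[str]]:
    """Group images into waves; every image in a wave can build in parallel."""
    # Map each image to the set of images that must be built before it.
    graph = {
        image: ({base} if base else set())
        for image, base in base_images.items()
    }
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        # Everything returned here has all of its base images already built.
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# None means the image starts from an external base maintained elsewhere.
factories = {
    "db-base": None,
    "app-a": "db-base",
    "app-b": "db-base",
    "app-c": "db-base",
}
print(build_waves(factories))
```

The database image lands in the first wave on its own, and the three application images share the second wave, which matches the parallel application installs described above.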

Combining factories

For complex environments, it can make sense to utilize multiple factories to support a single set of systems. The canonical example of this is Kubernetes. To keep K8S up-to-date, the individual nodes can be maintained with an image factory. The nodes can then be updated automatically on a regular schedule. Similarly, the containers running on these nodes can also be maintained using one or more image factories. For GitHub ARC, this approach can ensure that the environment is continuously updated, properly secured, and always has the latest runner versions.

Welcome the factory

I’m sure as you explore the pattern, you’ll find a number of other uses for it. By automating the management and distribution of images, you create a repeatable process that creates optimal environments for your VMs and containers. Have fun exploring and happy DevOp’ing!