Recently, I’ve had multiple conversations with teams that planned to self-host their GitHub Runners. Most of these teams were planning to use Azure Kubernetes Service (AKS) to make this easier to maintain. In each case, one of the primary drivers was to improve the availability of their runners. They supported runners for multiple teams in their organizations, and they wanted to offer a higher service-level agreement (SLA) than what GitHub offers.

Unfortunately for each of these teams, I had to explain that the math works against them. They can’t exceed the GitHub SLA. Let me explain why.

It begins with understanding network math and how to calculate availability.

Dependencies

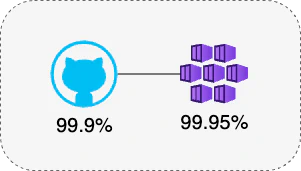

When you have a service or component that depends on another, the system is unavailable if either component is unavailable. We can calculate the availability by multiplying the two availability levels. As a practical example, let’s consider Azure Kubernetes Service. It has an availability of 99.95% (if you’re using multiple availability zones). If that is hosting GitHub self-hosted runners, it depends on the GitHub services being available. GitHub offers an SLA of 99.9%.

So if we just consider this simple scenario, we have an availability of 99.85%. Compared with depending on GitHub alone (99.9%), adding another potential point of failure has cost us about 0.05% of availability (roughly 4 hours, 21 minutes per year).
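As a minimal Python sketch of the dependency rule (the function name `serial_availability` is just an illustrative choice, not part of any Azure or GitHub tooling), availabilities are written as fractions and multiplied together:

```python
from math import prod


def serial_availability(*availabilities: float) -> float:
    """Availability of a chain in which every component must be up."""
    return prod(availabilities)


# AKS with multiple availability zones (99.95%) depending on GitHub (99.9%).
combined = serial_availability(0.9995, 0.999)
print(f"{combined:.2%}")  # 99.85%
```

Because every factor is at most 1, each extra dependency can only hold the result steady or lower it.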

Redundant services

When you have redundancy, it improves the availability of the system. As long as one component is available, the system is available. To calculate the availability, we need to know the chance of the parallel components failing at the same time. We can multiply each component’s chance of failure to determine the chance of the system failing. The chance of a single component failing is 100% - availability.

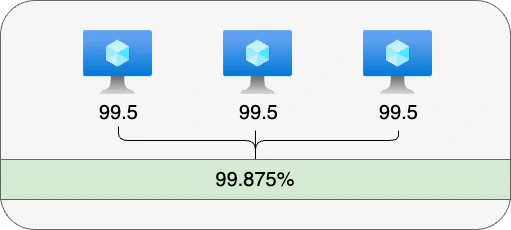

As an example, consider a three-node virtual machine (VM) cluster. We only need one member of the cluster to be available. For this scenario, assume a 99.5% availability for each VM.

An availability of 99.5% means there is a 0.5% chance of failure. That means the chance of all three failing at the same time is:

$$0.5\% \times 0.5\% \times 0.5\% = 0.005^3 = 0.0000125\%$$

Subtracting this from 100% gives us the probability that at least one of the VMs is available:

$$100\% - 0.0000125\% = 99.9999875\%$$

Compared with a single VM at 99.5%, this has improved the availability of the system by more than 43 hours per year!
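The redundancy rule can be sketched the same way. This is a minimal example, assuming the copies are identical and fail independently of each other (real VMs can share failure causes, so treat the result as an upper bound); `redundant_availability` is a name made up for illustration:

```python
def redundant_availability(availability: float, copies: int) -> float:
    """Availability when at least one of `copies` independent components must be up."""
    chance_all_fail = (1 - availability) ** copies
    return 1 - chance_all_fail


# Three VMs at 99.5% each, where only one needs to be available.
cluster = redundant_availability(0.995, 3)
print(f"{cluster:.7%}")  # 99.9999875%
```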

Complex SLA

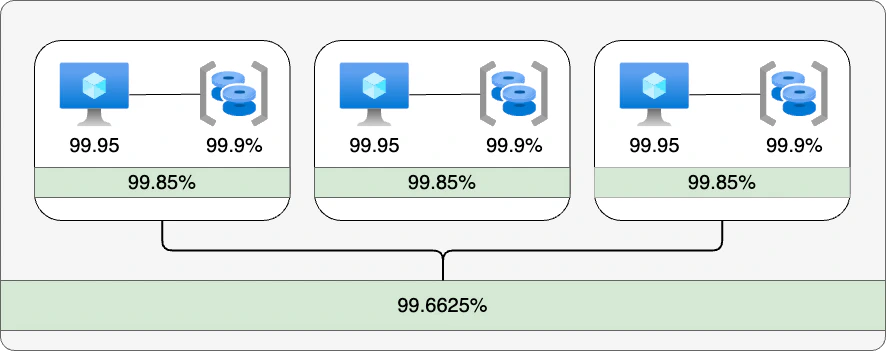

Now that you understand the basics, it’s easier to calculate a more complex situation. For example, assume you have a cluster with three VMs (99.95% availability) which each rely on a managed disk (99.9% availability).

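Each VM requires its disk to be available, so there is a dependency. The composite availability of the VM and its disk:

$$99.95\% \times 99.9\% = 99.85005\% \approx 99.85\%$$

If only one of these nodes has to be available, we can calculate the availability of the cluster as:

$$100\% - (100\% - 99.85\%)^3 = 100\% - (0.15\%)^3 \approx 99.9999997\%$$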
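Combining the two rules is just a matter of nesting them. The sketch below assumes independent failures, and the helper names `serial` and `redundant` are illustrative only:

```python
from math import prod


def serial(*availabilities: float) -> float:
    """Every component must be up: multiply the availabilities."""
    return prod(availabilities)


def redundant(availability: float, copies: int) -> float:
    """At least one of `copies` independent components must be up."""
    return 1 - (1 - availability) ** copies


node = serial(0.9995, 0.999)  # a VM and the managed disk it depends on
cluster = redundant(node, 3)  # three such nodes, any one of which is enough

print(f"node:    {node:.2%}")     # 99.85%
print(f"cluster: {cluster:.7%}")  # 99.9999997%
```

Working from the inside out (dependencies first, then redundancy) keeps the calculation manageable as the design grows.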

Azure VMs

Azure VMs are actually calculated somewhat differently. Azure guarantees that when virtual machines are deployed across two or more availability zones, you will have a 99.99% chance of reaching at least one of those VMs. Behind the scenes, this configuration allows Microsoft to offer a higher SLA.

Most VMs require a managed operating system disk and often utilize a data disk. Managed disks don’t have a financially backed SLA. The availability is related to the underlying storage; we can assume 99.9% for locally redundant or zone-redundant storage. There are typically three disk roles in a virtual machine: the operating system disk, the temporary disk, and an attached data disk. The temporary disk is locally attached and not managed, so its availability is tied to the VM. An ephemeral operating system disk is also locally attached, so it is part of the local host VM. Data disks and non-ephemeral operating system disks are managed, so they have a 99.9% availability.

For AKS nodes, an ephemeral OS disk is recommended. This is also the default configuration. The OS disk or temporary disk is used for logs and container data (including emptyDir), providing improved performance and reliability. If a three-node cluster is deployed across three availability zones, it will have a 99.99% availability. The trade-off is a lack of redundancy: the data only exists on the node and can be lost.

AKS, ARC, and storage

GitHub ARC can use this default configuration. If the workflows running in ARC need access to containerized services, most customers opt to use Kubernetes mode. This requires a persistent volume claim. This in turn requires storage using Azure Files (Premium), Azure NetApp Files, or managed disks (Premium or Ultra recommended).

Disks (99.9% SLA) are recommended for performance, but are limited to being read or written by a single node. Azure Files Premium (99.99% SLA) is recommended for simultaneous access from multiple nodes, but can be an order of magnitude slower than managed disks. When using Kubernetes mode, we can assume that availability requires both a VM and a managed disk:

$$99.99\% \times 99.9\% \approx 99.89\%$$

This reflects that Azure guarantees at least one VM is available 99.99% of the time, but both the node and its managed disk must be available for the services we need to be available.

SLA for ARC on AKS

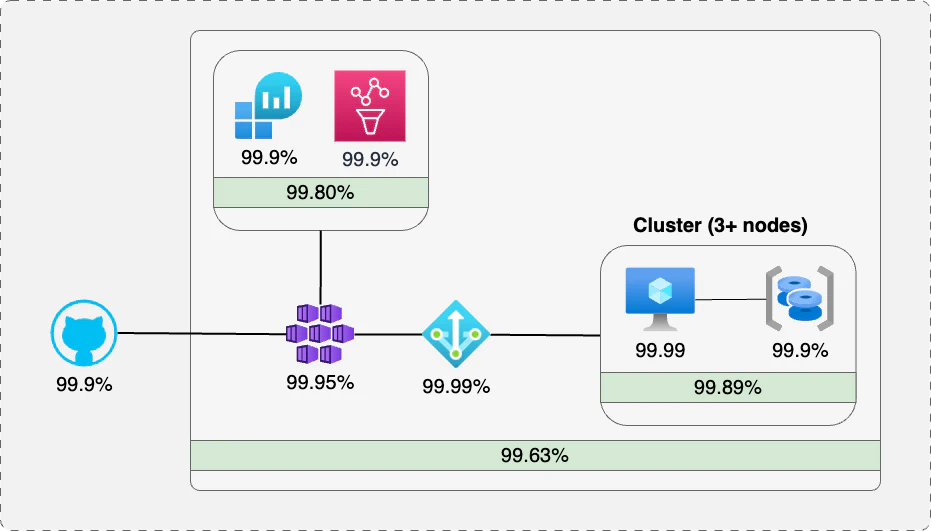

Let’s examine a more complete solution for running ARC on AKS. For these calculations, I’ll make a few assumptions:

- The AKS nodes are deployed across multiple availability zones

- The AKS instance is only used for ARC runners

- Kubernetes mode is being used, and managed disks are being used to provide the necessary persistent volume

- An Azure NAT Gateway is used to avoid SNAT exhaustion. This component has an SLA of 99.99% (excluding SNAT exhaustion)

- Azure components without an SLA will be ignored and treated as 100% available

To be able to respond to alerts and monitor in real time, we want both Azure Monitor Alerts (99.9% SLA) and managed Prometheus (99.9%) to be available. The composite SLA for those services is:

$$99.9\% \times 99.9\% = 99.8001\% \approx 99.8\%$$

Calculating the availability for the combined services (except monitoring):

And if we require the monitoring to be available:
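The steps above can be sketched in Python. This is one reading that is consistent with the 99.63% and 99.53% figures used in this post, not a definitive breakdown: it assumes the Azure factors are AKS (99.95%), zone-redundant VMs (99.99%), a managed disk (99.9%), and the NAT Gateway (99.99%), and that the final figure also includes the dependency on GitHub itself (99.9%). The grouping and variable names are illustrative.

```python
from math import prod

# SLAs as fractions. Which services belong in each group is an assumption.
azure_core = {
    "AKS (multiple availability zones)": 0.9995,
    "VMs across availability zones": 0.9999,
    "Managed disk (persistent volume)": 0.999,
    "NAT Gateway": 0.9999,
}
monitoring = {
    "Azure Monitor Alerts": 0.999,
    "Managed Prometheus": 0.999,
}
GITHUB_SLA = 0.999

core = prod(azure_core.values())          # Azure services, except monitoring
azure = core * prod(monitoring.values())  # Azure services, monitoring required
overall = azure * GITHUB_SLA              # runners also depend on GitHub

print(f"Azure without monitoring: {core:.2%}")     # 99.83%
print(f"Azure with monitoring:    {azure:.2%}")    # 99.63%
print(f"Including GitHub:         {overall:.2%}")  # 99.53%
```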

For this cluster design, we’ve calculated a 99.53% availability. This is a significant drop from the 99.9% availability (8 hours, 41 minutes of annual downtime) of a GitHub-hosted runner! In concrete terms, that’s 1 day 16 hours 51 minutes of downtime versus 8 hours 41 minutes of downtime!

High availability

Let’s consider another approach with a highly available configuration. We’ll add some assumptions:

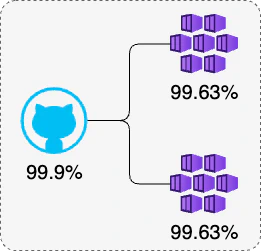

- The Azure environment from before (with a 99.63% composite availability) is replicated to a second region

- If either region is available, the service is available

This is how ARC gains availability. It relies on having an active-active configuration that is running in multiple regions. If one system is unavailable, the other should continue to work.

This changes the availability to 99.8986% (8 hours, 48 minutes of annual downtime):

$$\left(100\% - (100\% - 99.63\%)^2\right) \times 99.9\% \approx 99.8986\%$$

Still not the same as GitHub-hosted, but very similar. Of course, increasing availability always adds additional cost. In this case, we’ve doubled the cost of the Azure environment to improve availability. If we add a third region, it’s possible to drive the number up to 99.89999%. Whether or not this makes financial sense depends on the cost of downtime for your organization.
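To see how quickly the returns diminish, here is a small sketch that repeats the calculation for one, two, and three regions. It assumes each region has the rounded 99.63% composite availability from earlier, that regions fail independently, and that every region still depends on GitHub at 99.9%; the function name is hypothetical.

```python
GITHUB_SLA = 0.999
REGION_AVAILABILITY = 0.9963  # rounded composite for one Azure region


def multi_region_availability(regions: int) -> float:
    """Availability with `regions` independent active-active copies behind GitHub."""
    any_region_up = 1 - (1 - REGION_AVAILABILITY) ** regions
    return any_region_up * GITHUB_SLA


for regions in (1, 2, 3):
    print(f"{regions} region(s): {multi_region_availability(regions):.5%}")
# 1 region(s): 99.53037%
# 2 region(s): 99.89863%
# 3 region(s): 99.89999%
```

Each added region closes most of the remaining gap, but the result only approaches the GitHub SLA and never passes it.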

Achieving availability

Unfortunately, this makes it clear that there’s mathematically no way to get beyond the 99.9% availability offered by GitHub when you host a solution yourself. In fact, the only way to get to 99.9% availability with self-hosted runners is to provide 100% availability for the self-hosted runners (which, practically speaking, is impossible).
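Stated as an inequality, where $A_{\text{self}}$ is simply a label I’m using here for the composite availability of everything you host yourself:

$$A_{\text{total}} = A_{\text{GitHub}} \times A_{\text{self}} \le A_{\text{GitHub}} = 99.9\%, \quad \text{because } 0 \le A_{\text{self}} \le 1$$

Equality only holds when $A_{\text{self}} = 1$, which is the 100% case described above.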

These calculations haven’t factored in hosting the Helm charts or container images or network availability. Each new component further decreases the availability. Our calculations also assume a team with a strong understanding of managing and maintaining both the Azure infrastructure and the Kubernetes environment to optimize performance, troubleshoot problems, and minimize downtime. Without that expertise, the availability will be even lower. There’s a lot involved in selecting the right infrastructure, configuring Kubernetes properly, and validating your decisions by continuously monitoring the environment.

These compromises are why cloud providers offer managed solutions. They can provide a higher level of availability at a lower cost than most users can typically achieve on their own. Managed solutions also take advantage of the expertise of infrastructure experts who can optimize the environment for the specific workload (and support it at scale).

To be clear, this does not mean that ARC is never a fit. It can still be a solution to challenges such as providing access to resources in isolated networks. It simply means that you need to be aware of the tradeoffs you’re making when you decide to use it. It also means that if SLA is the primary concern, you should consider GitHub-hosted runners.