There’s a lot of documentation around using Azure Data Factory (ADF), but surprisingly little on implementing DevOps practices, and even less on implementing an automated workflow. Typically, ADF expects you to design and build pipelines within its user interface and then press Publish.

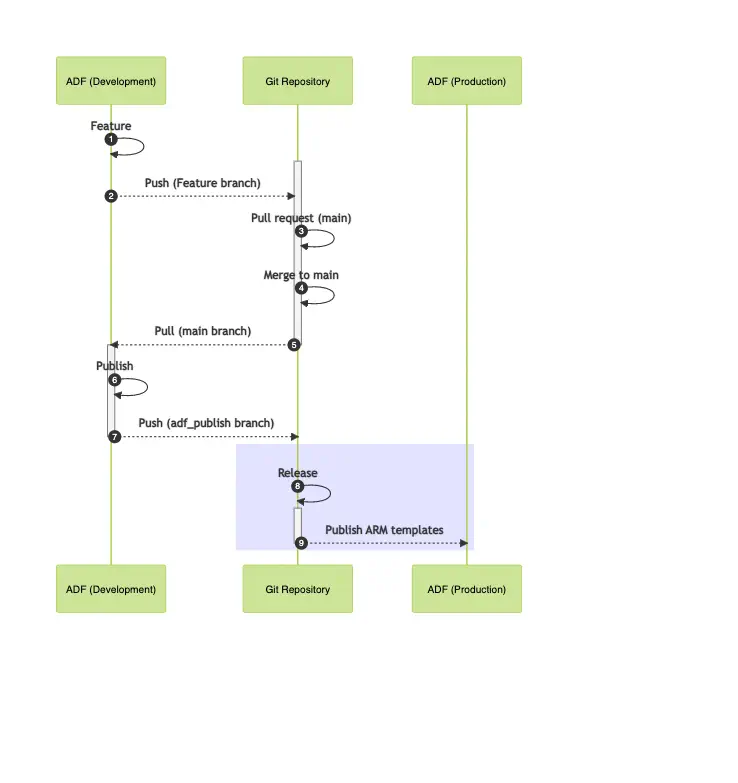

The Native CI/CD Process

Under the covers, edits in ADF create a series of JSON files that store the configuration, and changes made in the portal are saved to these files. When the configuration is published, those JSON documents are used to generate ARM templates. If ADF is configured to use a Git repository, a copy of the published templates is pushed to the publish branch (typically, adf_publish). This branch can then be reused for automation and deployment to other environments.

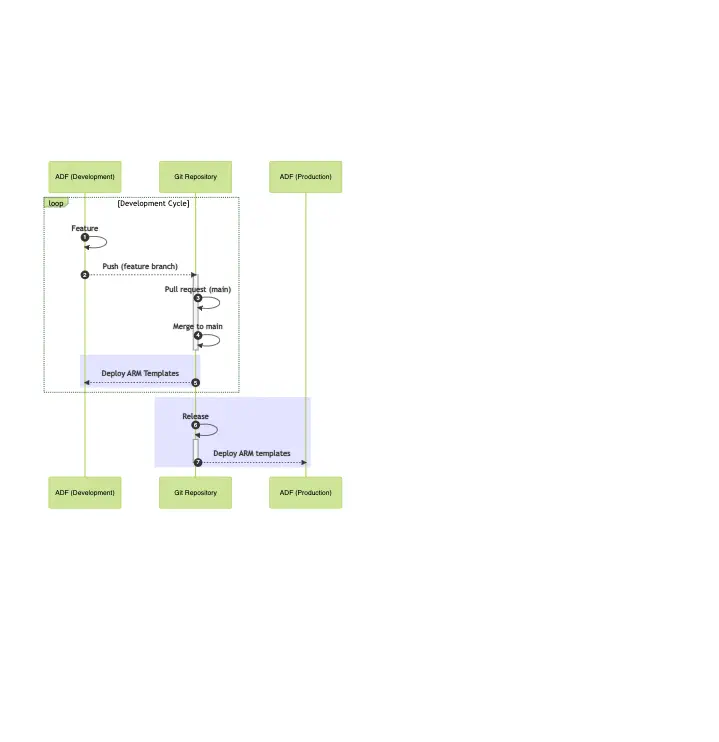

Improving the CI/CD Process

Alternatively, you can rely on a completely automated workflow. Instead of using the default behaviors, pull requests can be used to merge the code and deploy it to both the development ADF environment and any additional environments. This is possible thanks to @microsoft/azure-data-factory-utilities, an NPM package from Microsoft that reproduces the ADF template generation process.

Implementing the Process

Microsoft documents how to implement this workflow for Azure DevOps, and the process is very similar for other CI/CD systems. After generating the ARM templates from the source JSON files, the publication process follows these basic steps:

- Stop any running ADF triggers

- Deploy the ARM templates

- Delete any orphaned resources

- Restart the ADF triggers

To automate the deployment, you need the complete resource identifier of the ADF instance, which is listed in its properties. For example:

/subscriptions/2c8b8125-c9d0-4d17-811d-33a49b8349da/resourceGroups/MyResourceGroup/providers/Microsoft.DataFactory/factories/MyFactory
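The deployment steps later in this post need the resource group and factory name as separate values, and both are embedded in this ID. As a minimal sketch, assuming the ID always follows the layout above, plain Bash (version 4 or later, for the lowercase expansion) can pull out each segment. The function names are hypothetical helpers, not part of any Azure tool:

```shell
#!/usr/bin/env bash
# Hypothetical helpers that split an ADF resource ID into its parts.
# Assumes the layout:
#   /subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.DataFactory/factories/<name>
# Requires Bash 4+ for the ${var,,} lowercase expansion.

# Print the path segment that follows <key>, e.g. "resourceGroups" -> "MyResourceGroup".
id_segment() {
  local id="$1" key="$2"
  local -a parts
  local i

  # Split on "/"; the leading slash produces an empty first element.
  IFS='/' read -r -a parts <<< "$id"

  for ((i = 0; i < ${#parts[@]} - 1; i++)); do
    # Resource IDs are case-insensitive, so compare in lowercase.
    if [[ "${parts[i],,}" == "${key,,}" ]]; then
      printf '%s\n' "${parts[i+1]}"
      return 0
    fi
  done

  echo "Segment '$key' not found in '$id'" >&2
  return 1
}

subscription_from_id()   { id_segment "$1" subscriptions; }
resource_group_from_id() { id_segment "$1" resourceGroups; }
factory_name_from_id()   { id_segment "$1" factories; }
```

For example, `RESOURCE_GROUP="$(resource_group_from_id "$DATA_FACTORY_ID")"` yields `MyResourceGroup` for the ID above, and `factory_name_from_id` yields `MyFactory`.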

With that, you can use Node.js to validate the JSON files created by ADF and generate ARM templates from those files. For example, we can create a unified GitHub workflow that validates the ADF JSON files during a pull request and generates the ARM files on a push:

```yaml
name: Publish ADF

on:
  push:
    branches: [ "main" ]
  pull_request:
    branches: [ "main" ]

env:
  DATA_FACTORY_ID: /subscriptions/2c8b8125-c9d0-4d17-811d-33a49b8349da/resourceGroups/MyResourceGroup/providers/Microsoft.DataFactory/factories/MyFactory

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3

      # Always ensure an explicit version of Node
      # The NPM package requires Node 14 or higher
      - name: Setup Node.js environment
        uses: actions/setup-node@v3.5.1
        with:
          node-version: 16.x

      # Install the ADF Utilities
      - name: Install the utilities
        run: npm install @microsoft/azure-data-factory-utilities

      # Validate the ADF JSON files
      - name: Validate Files
        run: node ./node_modules/@microsoft/azure-data-factory-utilities/lib/index validate "${{ github.workspace }}" "${{ env.DATA_FACTORY_ID }}"

      # If it's a push, generate the ARM templates. The --preview
      # option is used to create newer PowerShell scripts that stop
      # only the triggers that have been updated.
      - name: Generate Files
        if: ${{ github.event_name == 'push' }}
        run: node ./node_modules/@microsoft/azure-data-factory-utilities/lib/index --preview export "${{ github.workspace }}" "${{ env.DATA_FACTORY_ID }}" "_export"

      # If it's a push, upload the ARM templates to archive
      # the results and enable the files to be used in other jobs.
      - uses: actions/upload-artifact@v3
        if: ${{ github.event_name == 'push' }}
        with:
          name: Templates
          path: ${{ github.workspace }}/_export
```

After that, it’s easy enough to use your favorite command line to deploy the ARM templates.

First, make sure you’re running the latest version of the Azure PowerShell (Az) module. It is already installed on the GitHub-hosted runners; in other environments, you can install it manually with this command:

```powershell
Install-Module -Name Az -Scope CurrentUser -Repository PSGallery -Force
```

After that, run the generated PowerShell pre-deployment script to stop any running triggers, supplying the correct resource group and factory name.
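As a sketch of that call, assuming the export step left a script named `PrePostDeploymentScript.ps1` alongside `ARMTemplateForFactory.json` in the `_export` folder (check the downloaded artifact, since the exact file name may differ between versions of the utilities), a Bash step can run it through `pwsh` in pre-deployment mode. The parameter names (`-armTemplate`, `-ResourceGroupName`, `-DataFactoryName`, `-predeployment`, `-deleteDeployment`) follow Microsoft's documented sample pre- and post-deployment script; the wrapper function `stop_adf_triggers` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the generated pre/post-deployment script.
# Assumption: the exported artifact contains PrePostDeploymentScript.ps1 and
# ARMTemplateForFactory.json in the same folder; adjust to match your export.
# Values are wrapped in PowerShell single quotes, so they must not contain a '.

# Usage: stop_adf_triggers <export-folder> <resource-group> <factory-name>
stop_adf_triggers() {
  local export_dir="$1" resource_group="$2" factory_name="$3"

  # -predeployment $true stops the triggers before the ARM deployment.
  # The \$ escapes keep Bash from expanding $true/$false, so PowerShell
  # receives its own boolean literals.
  pwsh -NoProfile -Command "& '${export_dir}/PrePostDeploymentScript.ps1' \
    -armTemplate '${export_dir}/ARMTemplateForFactory.json' \
    -ResourceGroupName '${resource_group}' \
    -DataFactoryName '${factory_name}' \
    -predeployment \$true \
    -deleteDeployment \$false"
}
```

For example, `stop_adf_triggers ./_export MyResourceGroup ProdADF`. The script calls Azure PowerShell cmdlets, so the session must already be signed in to Azure (for example, with `Connect-AzAccount`, or with `azure/login` and `enable-AzPSSession: true` on GitHub).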

Next, start the deployment using the az CLI (or use Azure PowerShell). Just remember to override any parameters that need to be changed for the environment. For example:

```shell
az deployment group create \
  --resource-group MyResourceGroup \
  --name deployment \
  --template-file ARMTemplateForFactory.json \
  --parameters @ARMTemplateParametersForFactory.json \
  --parameters factoryName=ProdADF linkedSQLServer_connectionString="..."
```

Finally, you need to restart the triggers, clean up any leftover resources, and clean up the deployment.
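Under the same assumptions (script name, folder layout, and Microsoft's documented parameters), the post-deployment call flips the two flags: `-predeployment $false` switches the script to post-deployment mode, which removes resources that are no longer in the template and restarts the triggers, and `-deleteDeployment $true` also removes the deployment record. The function name `finish_adf_deployment` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper; assumes the exported folder holds
# PrePostDeploymentScript.ps1 and ARMTemplateForFactory.json.
# Values are wrapped in PowerShell single quotes, so they must not contain a '.

# Usage: finish_adf_deployment <export-folder> <resource-group> <factory-name>
finish_adf_deployment() {
  local export_dir="$1" resource_group="$2" factory_name="$3"

  # -predeployment $false runs the post-deployment clean-up and trigger restart;
  # -deleteDeployment $true asks the script to delete the deployment as well.
  # The \$ escapes pass the literal PowerShell booleans through Bash.
  pwsh -NoProfile -Command "& '${export_dir}/PrePostDeploymentScript.ps1' \
    -armTemplate '${export_dir}/ARMTemplateForFactory.json' \
    -ResourceGroupName '${resource_group}' \
    -DataFactoryName '${factory_name}' \
    -predeployment \$false \
    -deleteDeployment \$true"
}
```

For example, `finish_adf_deployment ./_export MyResourceGroup ProdADF` after the `az deployment group create` call succeeds.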

One final tip: if you’re interested in seeing how this could be built into an Action, Microsoft has published two Actions that run these steps:

- Azure/data-factory-export-action@v1.1.0 - Generates the ARM templates. Contrary to the documentation, the current version of the code does not require a `package.json` to work.

- Azure/data-factory-deploy-action@v1.2.0 - Runs all of the steps required to deploy the templates, including executing the necessary PowerShell scripts.

There’s Always a Catch

There is one gotcha with automated deployments to ADF. There’s a long-standing issue with ADF that causes it to reset all of its Git-related settings after an ARM template deployment. It tends to happen when you’re using global parameters in the ARM template. When those are deployed, the parent factory entity is updated, but the Git configuration is not.

Because of this, deployments to a development environment can require those settings to be re-applied. We can do this with a simple set of command line calls for az datafactory:

```shell
az config set extension.use_dynamic_install=yes_without_prompt
az datafactory configure-factory-repo \
  --factory-resource-id "${{ env.DATA_FACTORY_ID }}" \
  --github-config account-name="MyGHHandle" collaboration-branch="main" last-commit-id="${{ github.sha }}" repository-name="MyRepo" root-folder="/" \
  --location="East US"
```

The last-commit-id parameter is not required. Providing it tells ADF that the current version of the collaboration branch has already been published.

This step is not required when publishing to a production environment. A production factory should not have any association with the Git repository: it is not a collaboration environment, so it doesn’t need direct access to one.
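A minimal sketch of that rule in Bash, assuming an environment name passed as the first argument (only `dev` is treated as the collaboration factory) and hypothetical `GH_ACCOUNT` and `GH_REPO` variables for the repository owner and name. `GITHUB_SHA` is set automatically by GitHub Actions, and the `az` calls mirror the commands above; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: re-apply ADF's Git settings after a deployment, but only
# for the development (collaboration) factory. GH_ACCOUNT and GH_REPO are assumed
# variable names; GITHUB_SHA is provided by GitHub Actions.

# Usage: restore_adf_git_config <environment> <factory-resource-id> <location>
restore_adf_git_config() {
  local environment="$1" factory_id="$2" location="$3"

  # Production (and any other non-dev) factories are not linked to Git.
  if [[ "$environment" != "dev" ]]; then
    echo "Skipping Git configuration for environment '$environment'"
    return 0
  fi

  # Let az install the datafactory extension without prompting.
  az config set extension.use_dynamic_install=yes_without_prompt

  # Re-link the factory to the repository and record the current commit as published.
  az datafactory configure-factory-repo \
    --factory-resource-id "$factory_id" \
    --github-config account-name="${GH_ACCOUNT:?GH_ACCOUNT must be set}" \
      collaboration-branch="main" \
      last-commit-id="${GITHUB_SHA:?GITHUB_SHA must be set}" \
      repository-name="${GH_REPO:?GH_REPO must be set}" \
      root-folder="/" \
    --location "$location"
}
```

For example, `restore_adf_git_config dev "$DATA_FACTORY_ID" "East US"` re-links the development factory, while `restore_adf_git_config prod ...` only prints a message and makes no Azure calls.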

And That’s All, Folks!

I hope this has helped you better understand the features in ADF and how they can be integrated with your favorite CI/CD tool! Have fun exploring these features, and happy Data DevOp’ing!